Autonomous Toy Car

May 01, 2013 | 3 minute read

For the final project in a Computational Cognitive Science course, I convinced two friends to help me equip a toy car so that it could navigate autonomously.

TL;DR

Here's a video that walks through the process of completing this project.

Project Definition

During this Computational Cognitive Science course, we had studied neural networks and other AI/ML techniques in depth. As a final project, we were asked to build a demonstration of what we had learned. Simply implementing a few algorithms to make the equivalent of a Not Hotdog app seemed boring. Instead, I wondered whether we could attach a camera to a toy car and train a neural network to navigate a simple road course.

I convinced two friends to join me, and after a quick Amazon purchase, we were on our way.

Design

Data Collection

To collect images wirelessly from the car, we attached an iPhone to it and used an app to stream pictures over wifi to a laptop.

At first, electrical tape and twist-ties got the job done.

It was a crude setup, but it worked, and it spared us some of the harder networking problems we would otherwise have had to solve.

Car Control

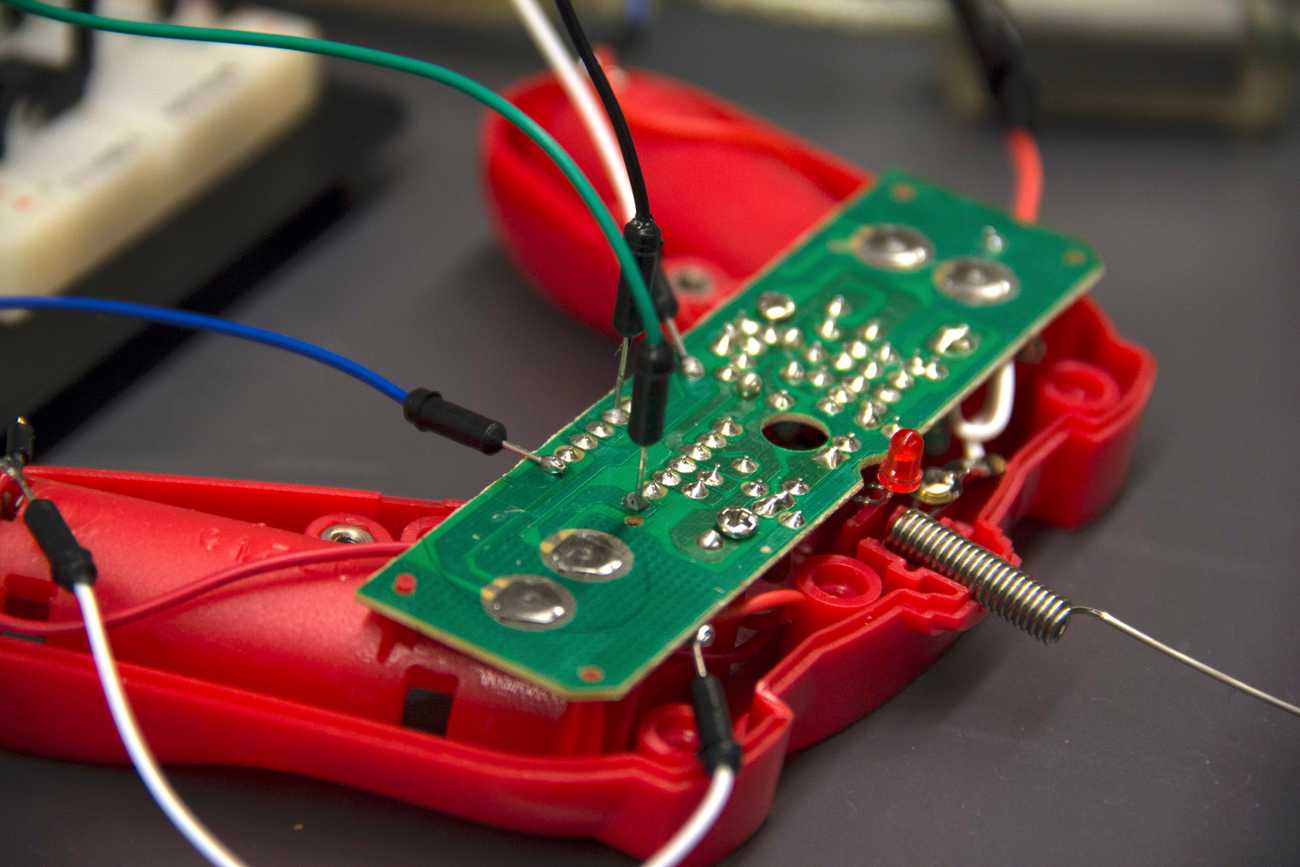

On the laptop, we used MATLAB to load each image into the program that would control the car. To control the car, we broke open its remote control to gain access to the electrical components.

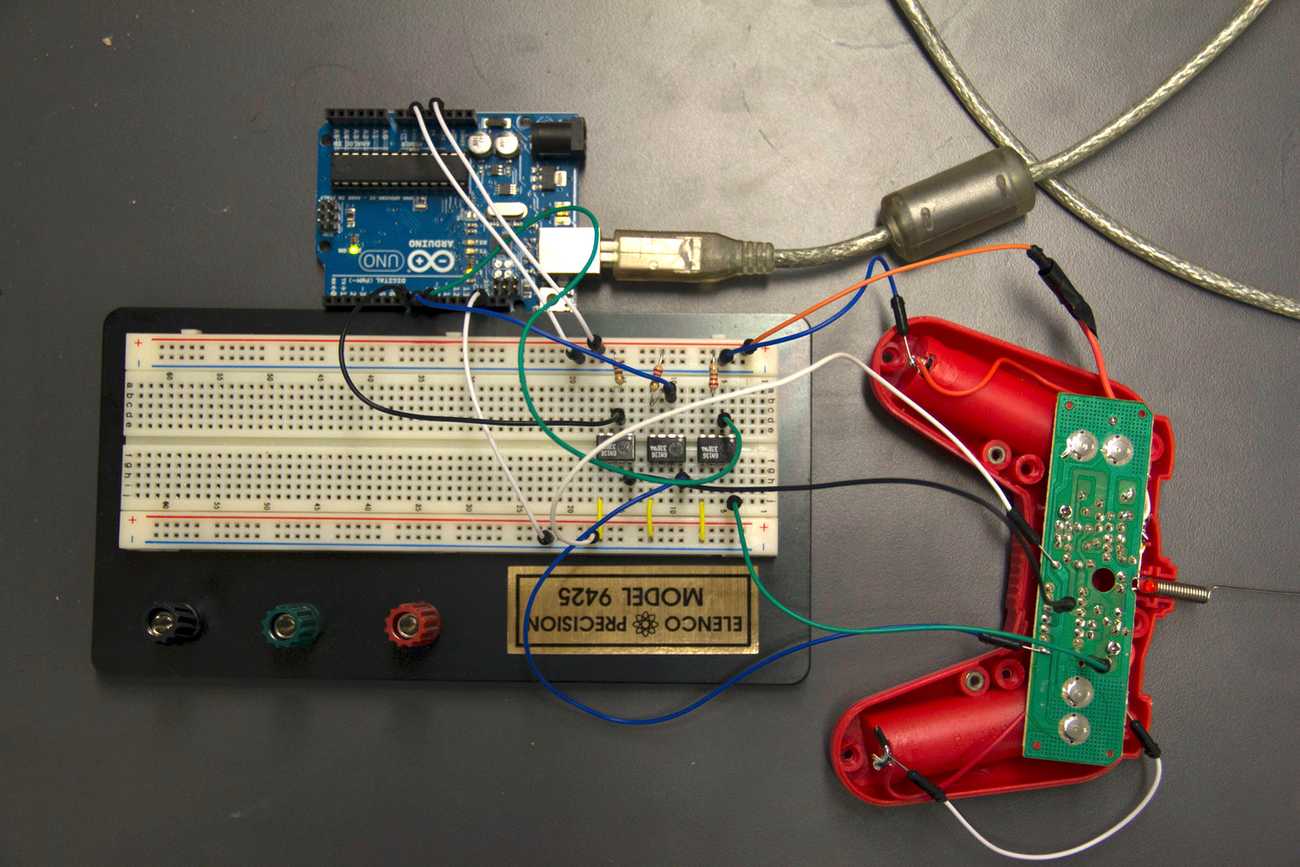

An initial breadboard prototype of our hacked radio controller.

Using some transistors, we overrode the controller's physical buttons, so that an Arduino could command the car to drive forward while steering left, right, or straight ahead.

Using a library that let MATLAB send commands to an Arduino, we wrote a small MATLAB script that drove the car through the Arduino. The commands could come either from a human operator or from an algorithm.
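To make the command path concrete, here is a minimal sketch of how three driving commands could map onto the transistor-switched controller buttons. Our actual script was written in MATLAB; this Python version is only illustrative, and the pin numbers and names (`FORWARD_PIN`, `LEFT_PIN`, `RIGHT_PIN`, `pin_states`) are assumptions made up for the example.

```python
# Hypothetical Arduino pin numbers; the real wiring is not documented here.
FORWARD_PIN, LEFT_PIN, RIGHT_PIN = 2, 3, 4

# Each command drives forward and optionally presses one steering button.
COMMANDS = {
    "left":     {FORWARD_PIN: 1, LEFT_PIN: 1, RIGHT_PIN: 0},
    "straight": {FORWARD_PIN: 1, LEFT_PIN: 0, RIGHT_PIN: 0},
    "right":    {FORWARD_PIN: 1, LEFT_PIN: 0, RIGHT_PIN: 1},
}


def pin_states(command):
    """Return the pin -> level mapping for a driving command."""
    try:
        return COMMANDS[command]
    except KeyError:
        raise ValueError(f"unknown command: {command!r}") from None
```

Whatever produces the command string, a person at the keyboard or the trained network, the rest of the pipeline stays the same.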

Training

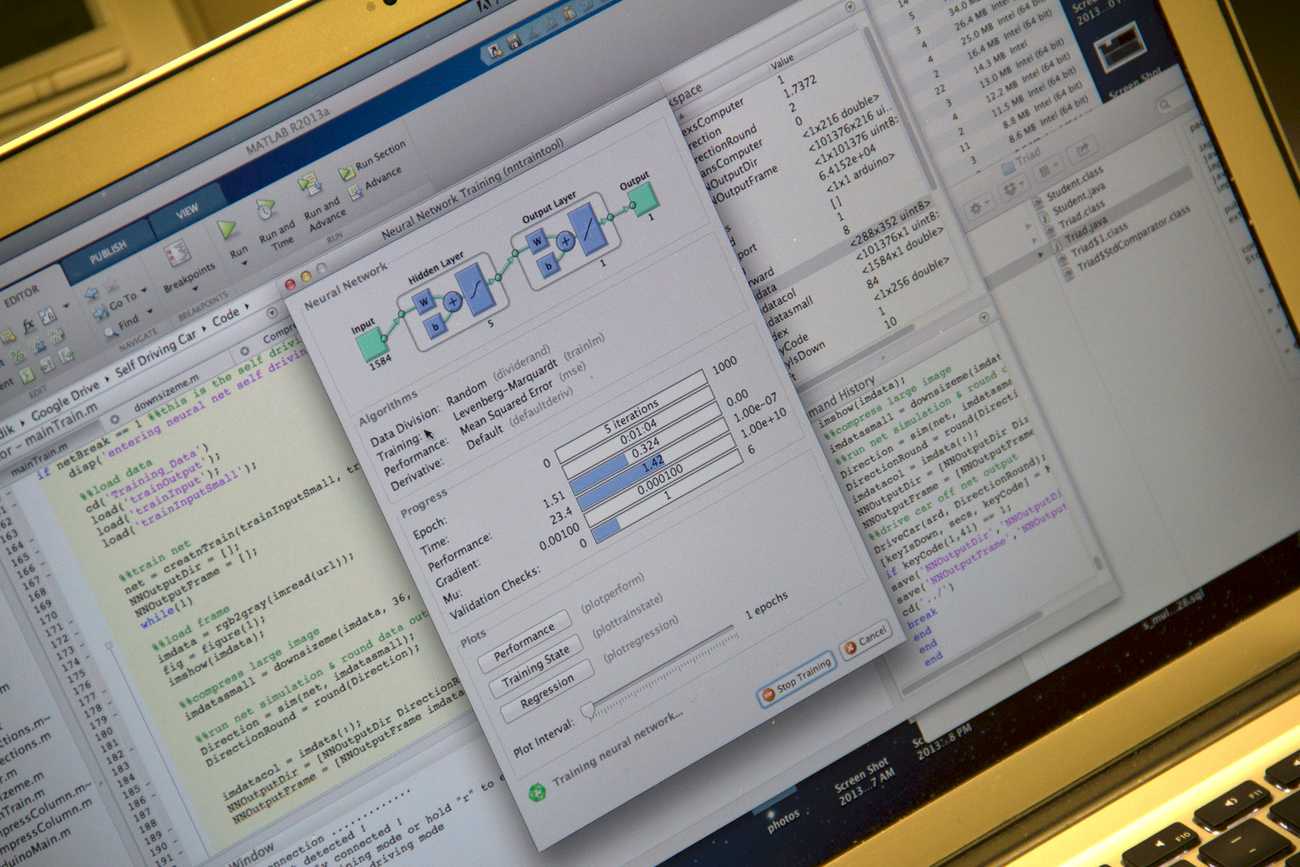

Our first challenge was to create a corpus of training data. With our MATLAB program in a training mode, the car's camera would feed us a single image, and we would respond with a left, right, or straight command. The program saved that command alongside the image.
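The bookkeeping behind that training mode is simple. The sketch below shows one way to store image and command pairs, in Python with NumPy rather than the MATLAB we used. The `TrainingCorpus` class and the label order are hypothetical, chosen just for this illustration.

```python
import numpy as np

# Assumed label order; index in this tuple becomes the class id.
LABELS = ("left", "straight", "right")


class TrainingCorpus:
    """Collects (image, command) pairs one frame at a time."""

    def __init__(self):
        self.images = []
        self.labels = []

    def add(self, image, command):
        if command not in LABELS:
            raise ValueError(f"unknown command: {command!r}")
        # Flatten each frame into a single feature vector.
        self.images.append(np.asarray(image, dtype=np.float32).ravel())
        self.labels.append(LABELS.index(command))

    def as_arrays(self):
        """Return (X, y): one row per image, one class id per row."""
        return np.stack(self.images), np.array(self.labels)
```

Calling `add` once per frame and `as_arrays` at the end yields the two arrays a classifier needs.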

We constructed our road courses from printer paper laid on the ground.

After running the car through several courses and building up a training corpus, we were ready to train a neural network.
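For readers who want to see what such a network looks like, here is a small sketch of a one-hidden-layer classifier trained with plain gradient descent. It is not our original MATLAB code, and the architecture and hyperparameters (`hidden`, `lr`, `epochs`) are assumptions picked for the example rather than the values we used.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_network(X, y, n_classes=3, hidden=8, lr=0.5, epochs=500, seed=0):
    """Fit a tanh hidden layer plus softmax output with cross-entropy loss."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(0.0, 0.1, (d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.1, (hidden, n_classes))
    b2 = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot targets
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)
        P = softmax(H @ W2 + b2)
        dZ2 = (P - Y) / n              # gradient at the output layer
        dZ1 = (dZ2 @ W2.T) * (1 - H ** 2)  # backpropagate through tanh
        W2 -= lr * (H.T @ dZ2)
        b2 -= lr * dZ2.sum(axis=0)
        W1 -= lr * (X.T @ dZ1)
        b1 -= lr * dZ1.sum(axis=0)
    return W1, b1, W2, b2


def predict(params, X):
    """Return the most likely class id for each row of X."""
    W1, b1, W2, b2 = params
    return softmax(np.tanh(X @ W1 + b1) @ W2 + b2).argmax(axis=1)
```

Here `X` holds one flattened image per row and `y` holds class ids 0, 1, 2 for left, straight, and right.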

Testing

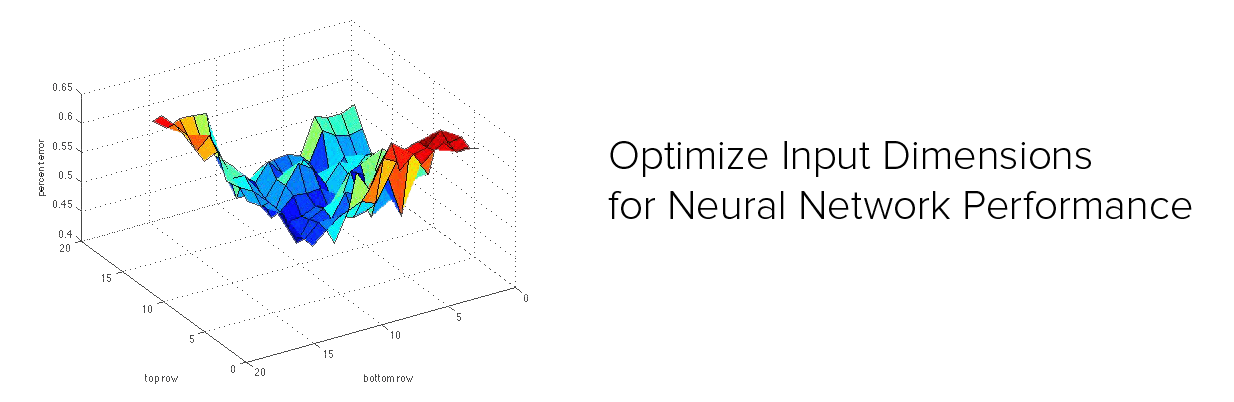

Next, we needed to test how well our neural network matched commands to images it had not seen before. We saw an opportunity to reduce our test error by cropping the input images to remove unnecessary information. After trying different combinations of rows cropped from the top and bottom of our training images, we found that removing six rows of pixels from the top and bottom gave the lowest test error.
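The crop search described above amounts to a small grid search. The following Python sketch shows the idea under some assumptions: images are stored as a `(count, height, width)` NumPy array, and `test_error` is a hypothetical callable that trains and evaluates a network on the cropped images and returns its error. Neither name comes from our original MATLAB code.

```python
import itertools

import numpy as np


def crop_rows(images, top, bottom):
    """Drop `top` rows and `bottom` rows from every image in the stack."""
    height = images.shape[1]
    if top + bottom >= height:
        raise ValueError("crop would remove every row")
    return images[:, top:height - bottom, :]


def best_crop(images, labels, test_error, max_rows=10):
    """Try every (top, bottom) pair and return (error, top, bottom) of the best."""
    best = None
    for top, bottom in itertools.product(range(max_rows + 1), repeat=2):
        if top + bottom >= images.shape[1]:
            continue  # nothing would be left of the image
        err = test_error(crop_rows(images, top, bottom), labels)
        if best is None or err < best[0]:
            best = (err, top, bottom)
    return best
```

Measuring the error on held-out images rather than on the training images keeps the chosen crop from simply rewarding memorization.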

Running

With our neural network fully trained, we were able to run the car autonomously. Given a variety of course layouts, it was able to successfully navigate on its own.
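Autonomous mode is just the training loop with the human replaced by the network. A minimal Python sketch of that loop, assuming three hypothetical callables (`get_frame`, `predict_command`, and `send_command`) that stand in for the camera stream, the trained network, and the Arduino link:

```python
def drive(get_frame, predict_command, send_command, max_steps):
    """Run the see-decide-act loop and return the commands that were sent."""
    history = []
    for _ in range(max_steps):
        frame = get_frame()
        if frame is None:  # camera stream ended
            break
        command = predict_command(frame)
        send_command(command)
        history.append(command)
    return history
```

Passing the three pieces in as functions makes it easy to swap the real camera and car for recorded frames when testing.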

As a final asset, alongside the video shared at the top of this page, we produced a detailed PDF writeup of this project.

Download Writeup